The pattern is so consistent, it could be a template. A steering committee forms. Vendors are evaluated. Data readiness assessments are commissioned. Infrastructure requirements are documented. Governance frameworks are drafted. Stakeholder alignment workshops are scheduled, and rescheduled when key executives can’t attend.

Twelve months pass. Eighteen months. The organization has invested considerable amounts of time and resources in preparing to innovate with AI. Production value delivered: zero.

Meanwhile, competitors who started with messier data and less comprehensive plans are already learning from deployed solutions. They’re iterating based on real user feedback while you’re still finalizing your technology selection criteria.

This is the 18-month trap, and if your organization has been planning an AI initiative for more than six months without a working prototype, you’re likely caught in it. The sequential approach (comprehensively prepare, then execute) remains the most reliable way to guarantee that your AI strategy fails.

The Sequential Planning Fallacy

The traditional approach feels logical. Reduce risk through comprehensive preparation. Build the foundation before you build on it. Get your house in order before inviting guests.

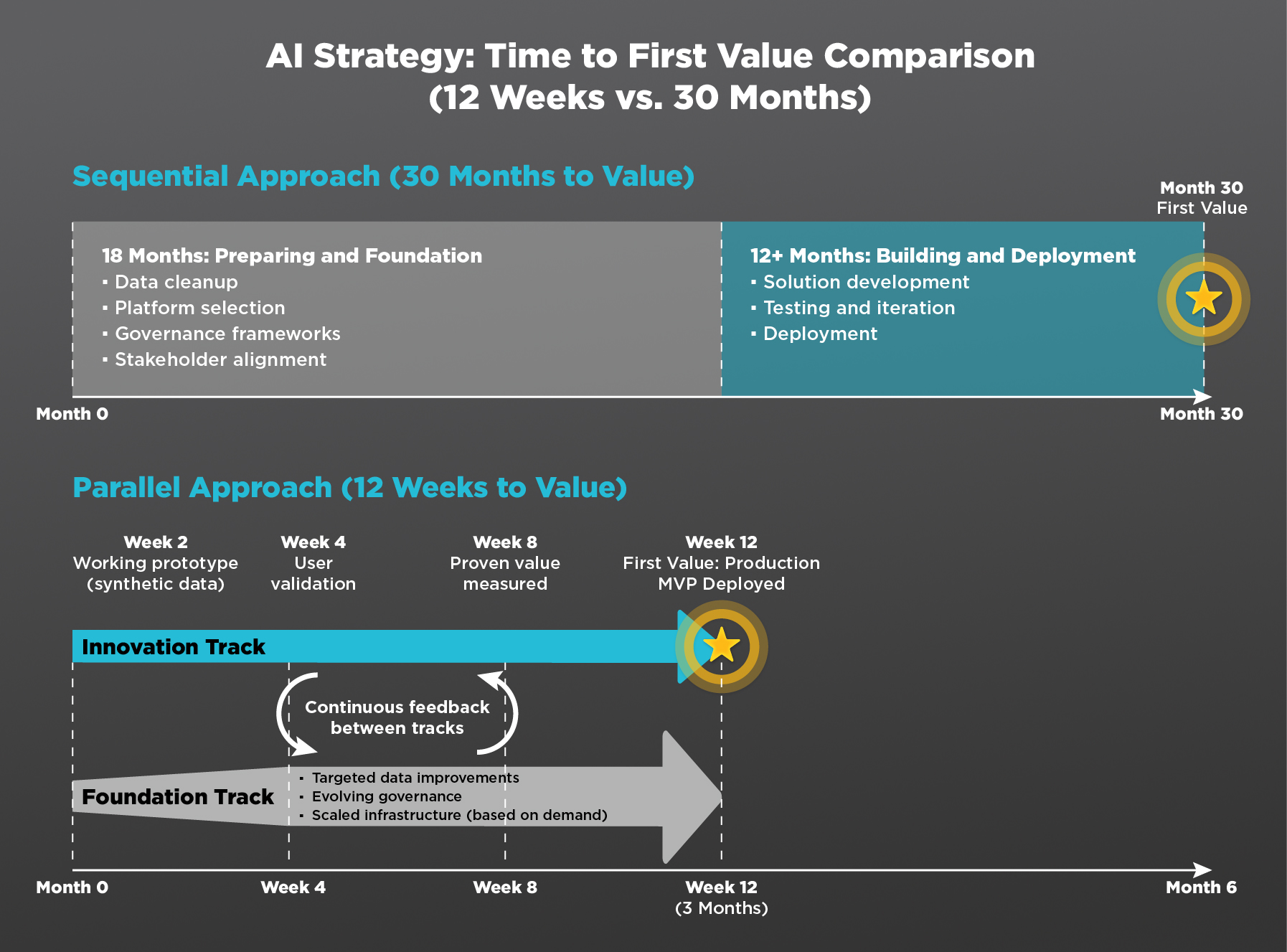

In practice, this translates into a familiar two-phase model. Phase one: spend 6 to 18 months cleaning data, building infrastructure, establishing governance, selecting platforms, training the organization, and achieving stakeholder alignment. Phase two: deploy AI solutions on this perfected foundation over the following 12 to 24 months.

The logic is sound. The results are not. Here’s why this approach consistently fails:

- Requirements change faster than foundations can be built. The data architecture you designed 14 months ago was based on use cases you understood then. The AI landscape has shifted. Your business priorities have evolved. That carefully designed foundation may no longer fit your needs.

- Teams don’t know what foundation they need until they try to build something. Data quality requirements are abstract until you’re configuring an agent. Governance needs are theoretical until you’re deploying a system that makes decisions affecting customers. Infrastructure requirements are speculative until you’re scaling a solution that works.

- Stakeholder enthusiasm doesn’t survive 18 months of preparation. The executive sponsor who championed the initiative moves to a different role. Budget priorities shift. The organizational patience for AI investment erodes with each quarter that passes without tangible results. By the time you’re ready to execute, the political capital required to succeed has been spent on readiness activities that produced nothing visible.

- The hidden cost is worse than the visible one. You’ve spent significant time and money getting ready, yet you’ve learned nothing about what actually works in your environment, with your data, for your users.

The Parallel Path Alternative

There’s a different approach, and it starts with a counterintuitive principle: run two concurrent tracks from day one, and neither waits for the other.

The Innovation Track focuses on value delivery. Start with available resources: 60% to 70% data quality is fine for initial experiments. Use synthetic data if you need to. Build working prototypes immediately with the tools you have today. Test with real users in weeks, not months. Deliver measurable value that justifies further investment. And, critically, build solutions that can migrate to production rather than throwaway proofs of concept that will need to be rebuilt from scratch.

The Foundation Track runs simultaneously, but its priorities are informed by what the innovation track reveals. Build infrastructure based on demonstrated demand rather than theoretical requirements. Let governance patterns emerge from successful experiments. Scale data quality improvements in areas that matter—the areas where prototypes have proven value. Invest in capabilities validated by actual use.

The two tracks aren’t independent. They continuously inform each other: innovation experiments reveal which foundation elements are needed, and foundation investments accelerate what innovation can deliver. But they advance in parallel, not in sequence.

This approach rests on an insight that’s obvious once you see it. You don’t know what data matters until you start experimenting. You don’t know what governance you need until you understand what you’re governing. You can’t design the right infrastructure until you know what you’re trying to scale.

What This Looks Like in Practice

A midsize energy company recently faced this choice. Their traditional IT planning process had produced a 14-month road map: comprehensive data cleanup, platform selection and implementation, governance framework development, and organizational change management. Only after completing this foundation work would they begin building AI solutions.

Projected time to first production value: 20 to 26 months.

They chose the parallel path instead. By week two, they had a working prototype that used synthetic data to simulate equipment failure prediction. By week four, operations personnel were testing the prototype with scenarios drawn from actual historical incidents. By week eight, they had measured results: the model could identify 73% of failures with 12 hours of advance warning, enough to enable preventive intervention. In week 12, they deployed a production MVP to a single facility. It was neither perfect nor comprehensive, but it was functional, delivering value and generating learning that would have been impossible to achieve through planning alone.

The foundation track ran concurrently, but instead of speculatively building infrastructure, they now knew exactly which data sources needed quality improvements (those the model actually used), which governance decisions were urgent (those required for production deployment), and which infrastructure investments were justified (those needed to scale a proven solution).

First production value arrived in 12 weeks, versus the projected 20 to 26 months. Foundation investments were justified by demonstrated ROI rather than PowerPoint projections.

Making the Shift

“We need to fix our data first” is the most expensive sentence in enterprise technology. It sounds prudent. Often, it’s an avoidance strategy disguised as preparation—a way to defer the harder work of actually building something and learning whether it creates value.

If you suspect your organization is caught in the sequential planning trap, ask your team three questions:

- What could we test this quarter with the data we have today? Not with perfect data, and not with the data we wish we had, but with what exists right now.

- What’s the smallest experiment that would prove or disprove our hypothesis? Not a comprehensive solution or a production-ready system, but the minimum viable test that would tell us whether we’re on the right track.

- Are we building governance for risks we’ve actually encountered or risks we imagine? Theoretical governance frameworks have a way of expanding to address every conceivable scenario. Practical governance addresses the specific risks revealed by actual deployment.

The mindset shift is uncomfortable but necessary: from “comprehensively prepare, then execute” to “learn by doing, then scale what works,” and from governance as a prerequisite to governance that evolves with demonstrated need.

The Real Choice

The question isn’t whether to invest in data quality, governance, and infrastructure. Of course you should. The question is whether you wait for these foundations before delivering value or treat foundation building and value delivery as concurrent activities that inform each other.

Organizations that wait 18 months to start innovating aren’t being careful. They’re falling behind. They’re spending resources on preparation while learning nothing about what will actually work. They’re watching competitors, who started sooner, accumulate the practical knowledge that only comes from deployment.

The parallel path approach isn’t about moving recklessly. It’s about recognizing that the fastest way to build the right foundation is to start building solutions that reveal the foundation you need.

If your AI initiatives have been in planning for six months or more without a working prototype, you have a choice to make. You can continue preparing and hope the competitive landscape waits for you, or you can start learning by doing, this quarter, with whatever resources you have today.

The 18-month trap is real, but it’s also optional.

Learn more about the Adaptive Innovation Management System (AIMS) and how the parallel path methodology can accelerate your AI initiatives.