Most shared services leaders are hearing the same pitch: AI will reduce volume and improve efficiency. That’s true, but it’s not the transformation.

The transformation is this: AI shifts the shared services focus from cases to outcomes. Done well, that shift means routine work stops being “handled” and starts being delivered end to end, with proof, controls, and a built-in learning loop. Shared services becomes a managed service platform because it can take action, not just answer questions.

From Cases to Outcomes: Why the Model Matters More Than the Tools

Shared services leaders are being told a familiar story about AI: fewer cases, lower costs, faster resolution. None of that is wrong, but it’s incomplete. The real shift isn’t that AI makes shared services cheaper. It’s that AI makes a different operating model possible.

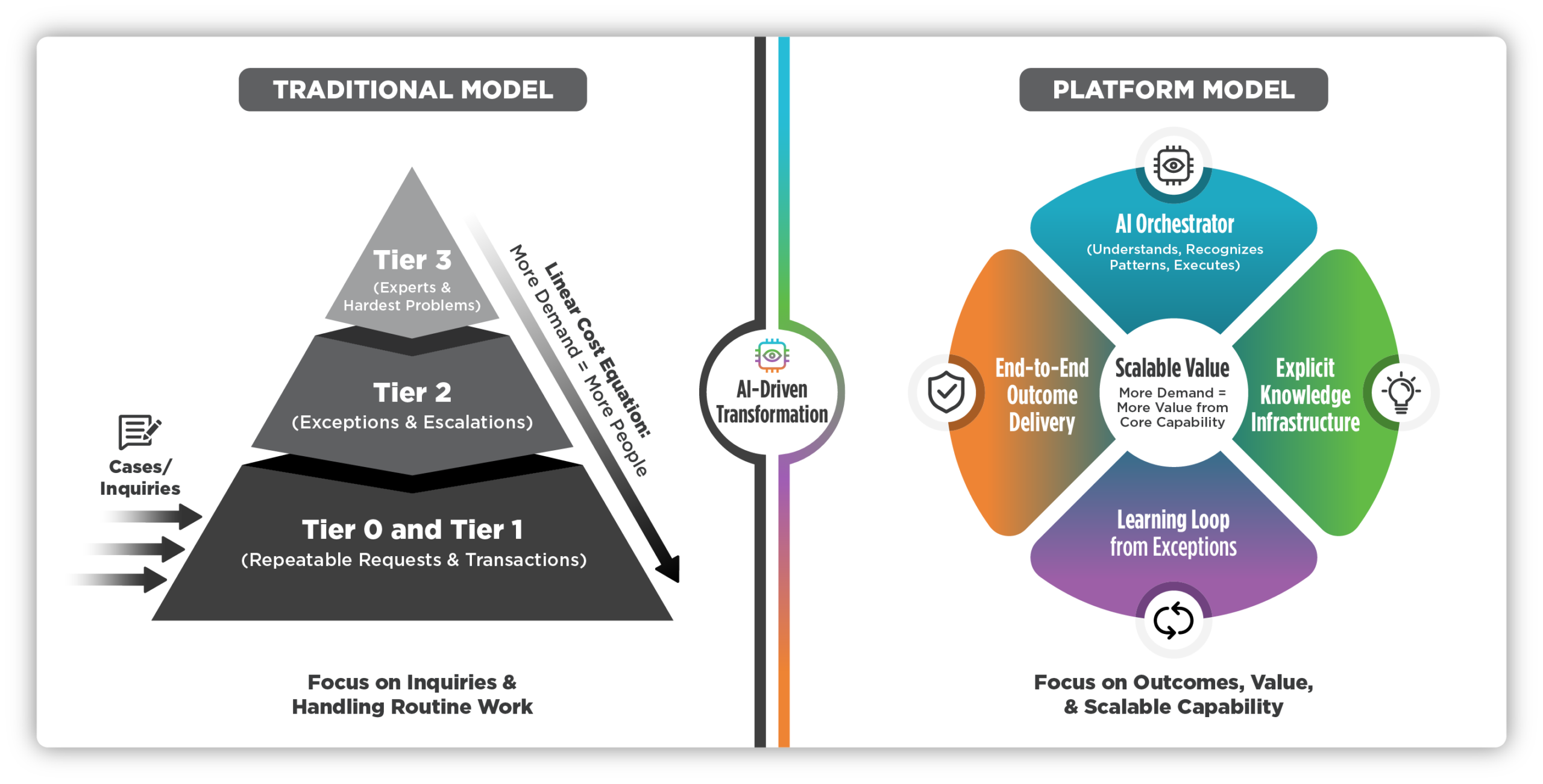

For the first time, shared services can move from managing requests to delivering outcomes end to end. Not answering questions, but completing work. Not routing cases, but executing standard services with controls, proof, and a built-in learning loop. When that happens, shared services stops behaving like a labor pyramid and starts behaving like a platform.

This distinction matters. Organizations that treat AI as a way to shave handle time will see incremental gains that plateau quickly. Organizations that redesign shared services as a platform—one that can absorb demand, learn from exceptions, and scale intelligence without adding people—will see compounding returns.

This article makes a simple case: AI doesn’t just improve shared services performance. It changes what shared services is. And leaders who don’t recognize that shift early will spend the next several years optimizing a model that no longer fits the work.

Why This Time Is Different

Shared services has undergone automation waves before. IVR systems, portals, RPA, and chatbots—each promised transformation and delivered incremental gains. Leaders are right to be skeptical.

What’s changed is that AI can now do three things together that previously required separate systems or human judgment: understand requests in plain language, recognize patterns and apply policy consistently, and execute structured work across systems. That combination is new. It’s what makes “self-service” stop meaning a portal where employees hunt for forms and start meaning an orchestrator that interprets the request, pulls the right context, collects missing details up front, executes the standard steps, and escalates only when it should.

This changes the economics. Shared services stops behaving like a linear cost equation—where more demand means more people—and starts behaving like a platform, where more demand means more value from the same core capability.

The Old Shape of Shared Services Work

Shared services has traditionally operated as a labor pyramid with a tiered workflow model. A broad base handles repeatable requests and transactions. A smaller layer manages exceptions and escalations. A thin layer of experts solves the hardest problems.

It works until it doesn’t. Volume spikes, complexity rises, and either the service suffers or you hire more people. The model scales, but only by adding cost.

The New Shape of Work: AI as an Orchestrator

AI flattens that pyramid, not by eliminating people but by changing what they spend their time on.

Here’s the test: if your AI efforts focus on reducing handle time, you’re automating fragments. If you’re focused on delivering the outcome with fewer handoffs, you’re building a platform. You’re not handling cases anymore. You’re managing outcome throughput, quality, and control.

Consider a direct deposit change in HR. The old process: partial request, follow-up questions, policy checks, manual system update, verification, delayed confirmation. It’s slow, touch-heavy, and risky: fraud, payments to the wrong account, and exposure of sensitive data.

In a platform model, intake collects required fields up front and validates completeness. The system applies the appropriate rules based on context—country, timing, and thresholds. It executes the update and logs what happened and why. Only risky or ambiguous cases are routed to a human, and when they are, that person sees the full context and a recommended next step.
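To make that flow concrete, here is a minimal Python sketch of the four steps: intake validation, context rules, routing, and execution with an audit trail. Everything in it is hypothetical: the field names, the per-country cutoff days in `CUTOFF_DAYS`, the `MAX_RECENT_CHANGES` fraud threshold, and the in-memory `PAYROLL` dictionary standing in for a real payroll system. It illustrates the shape of the orchestration, not any particular product or the rules an actual HR function would use.

```python
from dataclasses import dataclass, field

# Hypothetical intake schema for a direct deposit change (illustrative only).
REQUIRED_FIELDS = ("employee_id", "country", "routing_number", "account_number")

# Hypothetical policy: minimum days before payroll that a change must arrive, by country.
CUTOFF_DAYS = {"US": 3, "UK": 5}

# Hypothetical fraud threshold: recent bank changes above this count trigger human review.
MAX_RECENT_CHANGES = 1

# In-memory stand-in for the payroll system of record (hypothetical).
PAYROLL: dict[str, str] = {}


@dataclass
class Outcome:
    status: str                                   # "completed", "needs_info", or "escalated"
    reasons: list[str] = field(default_factory=list)
    recommended_action: str = ""                  # next step suggested to a human reviewer
    audit_log: list[str] = field(default_factory=list)


def process_change(request: dict, days_to_payroll: int, recent_changes: int) -> Outcome:
    log: list[str] = []

    # 1. Intake: validate completeness up front instead of chasing follow-ups later.
    missing = [f for f in REQUIRED_FIELDS if not request.get(f)]
    if missing:
        log.append(f"intake incomplete, missing: {', '.join(missing)}")
        return Outcome("needs_info", [f"missing {f}" for f in missing],
                       "ask the requester for the missing fields", log)

    # 2. Policy: apply rules based on context (country, timing, thresholds).
    reasons = []
    country = request["country"]
    if country not in CUTOFF_DAYS:
        reasons.append(f"no policy configured for country {country}")
    elif days_to_payroll < CUTOFF_DAYS[country]:
        reasons.append("request falls inside the payroll cutoff window")
    if recent_changes > MAX_RECENT_CHANGES:
        reasons.append("repeated recent bank changes (possible fraud)")

    # 3. Route: only risky or ambiguous cases reach a person, with reasons and a next step.
    if reasons:
        log.append("escalated: " + "; ".join(reasons))
        return Outcome("escalated", reasons,
                       "confirm the change with the employee through a known contact channel", log)

    # 4. Execute and record what happened and why, masking the account number in the log.
    PAYROLL[request["employee_id"]] = request["account_number"]
    log.append(f"updated deposit for {request['employee_id']} "
               f"to account ending {request['account_number'][-4:]}: all checks passed")
    return Outcome("completed", audit_log=log)


if __name__ == "__main__":
    request = {"employee_id": "E123", "country": "US",
               "routing_number": "011000015", "account_number": "123456789"}
    print(process_change(request, days_to_payroll=5, recent_changes=0).status)  # completed
    print(process_change(request, days_to_payroll=1, recent_changes=0).status)  # escalated
```

The escalation path returns the reasons and a recommended action alongside the log. That is what lets the reviewer see the full context, and it is also what makes escalations countable later as feedback.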

The difference isn’t just speed. It’s that exceptions become a learning loop rather than waste. Every escalation signals something: the rule is unclear, the knowledge is stale, or an upstream process is broken. Treat those signals as feedback, and cost-to-serve improvement becomes durable. Fewer repeat issues. Fewer avoidable escalations. Fewer “exceptions that became normal.”

That’s the difference between AI answering questions and AI delivering services.

Five Design Choices That Determine the Payoff

- Define what can run end to end—and where it must stop. Set boundaries by risk and ambiguity, not by org chart. In-policy, repeatable, low-risk work should flow through without human involvement. High-impact, sensitive, or genuinely novel work should be routed to people. The win isn’t pushing everything to AI. It’s building a clean operating boundary that can expand over time as you learn.

- Treat controls as the accelerator, not the brake. The instinct is either to clamp down and slow everything or to “trust the model” and hope for the best. Neither works. Speed comes from visibility: traceability to see what happened, validation where errors are costly, human approvals for sensitive categories, and escalation rules that treat uncertainty as a learning opportunity. Controls designed this way don’t slow you down. They let you move fast without losing trust.

- Make knowledge ownership explicit. In a platform model, knowledge isn’t documentation. It’s operating infrastructure. If knowledge is stale, inconsistent, or disputed, self-service fails, humans get flooded, escalations spike, and confidence collapses. Someone needs to own the sources of truth, update cadence, approvals, and the retirement of outdated guidance. And there needs to be a tight loop between what escalates and what gets fixed.

- Redesign roles around exceptions and improvement. This is where leaders often go wrong—treating AI as a headcount story rather than a redeployment story. The goal isn’t fewer people. It’s people doing different work. Frontline teams spend more time on judgment, empathy, and edge cases. Specialists spend less time on case-by-case heroics and more time preventing repeat issues. New roles emerge: AI performance monitoring, knowledge management, workflow improvement, and compliance oversight. These aren’t overhead. They’re what make the platform run.

- Manage a platform scorecard. Case volume and handle time are blunt instruments. A platform scorecard tracks cost-to-serve trends, touches per outcome, end-to-end cycle time, quality and rework rates, exception rates and their causes, and control effectiveness. Once shared services builds this discipline, the discipline itself becomes something it can export to other functions.
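One way to sketch such a scorecard in Python, assuming each delivered outcome is logged as a record with a few hypothetical fields (`human_touches`, `cycle_hours`, `reworked`, `exception_cause`) and that total cost for the period is known. Control effectiveness is left out because it depends on audit and testing data this simple record does not capture. Grouping exception causes is what feeds the learning loop described earlier: the most frequent causes point to the rules, knowledge, or upstream processes worth fixing first.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class OutcomeRecord:
    # Hypothetical fields; real data would come from workflow and case system logs.
    process: str
    human_touches: int                     # times a person handled the request
    cycle_hours: float                     # request received -> outcome confirmed
    reworked: bool                         # outcome had to be corrected afterward
    exception_cause: Optional[str] = None  # why it escalated, if it did


def scorecard(records: list[OutcomeRecord], total_cost: float) -> dict:
    n = len(records)
    if n == 0:
        raise ValueError("no outcomes to score")
    causes = [r.exception_cause for r in records if r.exception_cause]
    return {
        "cost_to_serve": total_cost / n,
        "touches_per_outcome": sum(r.human_touches for r in records) / n,
        "median_cycle_hours": median(r.cycle_hours for r in records),
        "rework_rate": sum(r.reworked for r in records) / n,
        "exception_rate": len(causes) / n,
        # Most frequent exception causes: candidates for rule, knowledge, or upstream fixes.
        "top_exception_causes": Counter(causes).most_common(3),
    }


if __name__ == "__main__":
    records = [
        OutcomeRecord("direct_deposit", 0, 0.5, False),
        OutcomeRecord("direct_deposit", 2, 30.0, False, "payroll cutoff"),
        OutcomeRecord("direct_deposit", 1, 6.0, True, "payroll cutoff"),
        OutcomeRecord("address_change", 0, 0.2, False),
    ]
    print(scorecard(records, total_cost=40.0))
```

Using the median for cycle time is a deliberate choice in this sketch: a few long-running exceptions would otherwise dominate an average and hide whether routine outcomes are getting faster.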

The Strategic Endgame for Shared Services

Here’s the upside many leaders miss: when shared services runs AI in production—at scale, across processes, with real performance data and real risk—it becomes one of the few places in the enterprise that actually knows how to operate AI. Not as a pilot. For real.

That experience is valuable because most functions trying to become AI-enabled hit the same walls: unclear ownership, messy knowledge, weak data, brittle automation, and governance that’s either too light to be safe or too heavy to move.

Shared services is already designed to navigate those tradeoffs. Over time, it can export reusable patterns (intake templates, escalation rules, knowledge governance, controls by design, and performance dashboards) to the rest of the enterprise. It can shift from reporting SLAs to delivering decision-useful insights: where demand is spiking, which policies create friction, which exceptions recur, and which upstream fixes will actually reduce costs.

That’s how shared services evolves from processor to partner.

The Bottom Line

Every shared services leader will get some efficiency from AI. That’s table stakes. The question is whether you stop there, a little faster and a little cheaper, or redesign around what’s now possible. The difference won’t be whether you benefit; it will be how far those benefits go.

Three years from now, some shared services organizations will be running AI as a platform—absorbing volume, learning from exceptions, exporting practices to the rest of the enterprise. Others will still be managing cases, just with better chatbots. The efficiency gains might look similar in year one. By year three, they won’t. That gap will compound.

How We Can Help

We help shared services and GBS leaders move beyond isolated AI use cases and redesign their organizations to operate as platforms. Our work focuses on three practical outcomes:

- Reframing the operating model – Assessing whether the current service delivery model is built to scale intelligence, which includes defining where end-to-end delivery is possible, where human judgment must remain, and how to establish clean operating boundaries.

- Designing for execution and trust – Translating platform concepts into concrete changes across people, process, and technology, and embedding governance, controls, and accountability.

- Building toward enterprise value – Identifying which capabilities can be reused and exported across the enterprise, shifting shared services from service provider to strategic partner.

This work isn’t about adopting AI faster. It’s about redesigning shared services so the benefits of AI don’t stall after year one.